OpenAI’s latest innovation, o3-mini, has entered the spotlight, promising a remarkable blend of efficiency and performance. This new reasoning model rivals its predecessor, o1, at a fraction of the cost, making it an appealing option in today’s competitive AI landscape. Amid rising demand for reasoning models that prioritize accuracy over speed, o3-mini has quickly climbed the global benchmark rankings. However, as we delve deeper into its capabilities, we must ask: does o3-mini truly deliver on its promises, or is it simply rehashing familiar concepts with a fresh coat of paint? In this exploration, I put o3-mini to the test in real-world scenarios to uncover the nuances of its reasoning abilities.

Introduction to o3-mini: A New Era of Reasoning Models

OpenAI’s recent launch of the o3-mini reasoning model has stirred excitement in the AI community. The model matches the performance of its predecessor, o1, at a significantly lower cost. It stands out for its efficiency and speed and has quickly climbed the global benchmark tables, securing its place among competing AI reasoning models. Users are also drawn by the promise of greater accuracy and fewer hallucinations in its responses.

Demand for advanced reasoning models like o3-mini is higher than ever. These systems prioritize careful evaluation over instantaneous answers; the deliberate pace can mean longer waits, but it aims to make the information they provide more reliable. OpenAI’s commitment to models that reason before they respond aligns with growing expectations that AI systems deliver not just speed, but also quality and dependability.

Frequently Asked Questions

What is o3-mini and how does it compare to its predecessor, o1?

OpenAI’s o3-mini is a new reasoning model that matches the performance of o1 at a significantly lower cost. It is praised for its efficiency and speed and ranks highly in global benchmarks.

What are reasoning models and why are they in demand?

Reasoning models are AI systems that work through a problem before answering, with the aim of producing more accurate results. They are in demand because users need reliable, well-considered answers, especially for complex decision-making.

How can the reasoning level of o3-mini be adjusted?

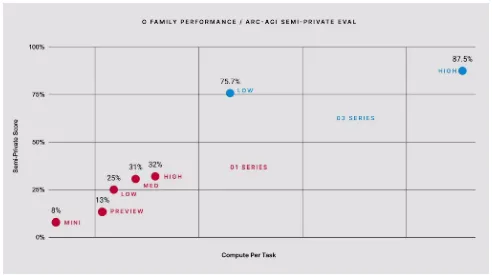

Users can set o3-mini’s reasoning level to low, medium, or high, trading response time and token usage against depth of reasoning to suit the task.
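When the model is called through the API, the reasoning level corresponds to a request parameter. The sketch below assumes OpenAI’s Chat Completions endpoint and its `reasoning_effort` field for o-series models; `build_o3_mini_request` is a hypothetical helper that only assembles the request body, so it runs without an API key or network access.

```python
# A minimal sketch, assuming OpenAI's Chat Completions API, where o-series
# models accept a "reasoning_effort" field of "low", "medium" or "high".
# build_o3_mini_request is a hypothetical helper: it only builds the request
# body and sends nothing over the network.

VALID_EFFORTS = ("low", "medium", "high")


def build_o3_mini_request(prompt: str, effort: str = "medium") -> dict:
    """Return a Chat Completions request body for o3-mini at the given effort."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    body = build_o3_mini_request("Which box holds the keys?", effort="high")
    print(body["reasoning_effort"])  # prints: high
```

In recent versions of the official `openai` Python package, a body like this can be passed as keyword arguments, as in `client.chat.completions.create(**body)`; the supported parameters can change between model releases, so the current API reference is the authority.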

What was the outcome of the ‘Truth or Lie?’ test?

In the ‘Truth or Lie?’ test, o3-mini identified the correct box at both the low and high reasoning levels, although the two runs differed in processing time and token usage.
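Logic puzzles of this kind can be checked without any model by trying every possible location for the keys. The article does not reproduce the test’s wording, so this sketch uses a hypothetical three-box puzzle in which exactly one label is true; `solve` and the labels are illustrative and are not the puzzle o3-mini was given.

```python
from typing import Callable, Dict, List

# A label is modelled as a predicate: given the box that actually holds
# the keys, is the statement written on this box true?
Label = Callable[[str], bool]


def solve(labels: Dict[str, Label], true_count: int = 1) -> List[str]:
    """Return every box that, if it held the keys, makes exactly
    `true_count` of the labels true."""
    solutions = []
    for keys_in in labels:
        n_true = sum(label(keys_in) for label in labels.values())
        if n_true == true_count:
            solutions.append(keys_in)
    return solutions


# Hypothetical puzzle, for illustration only.
labels = {
    "A": lambda k: k == "A",  # "The keys are in this box."
    "B": lambda k: k != "B",  # "The keys are not in this box."
    "C": lambda k: k != "A",  # "The keys are not in box A."
}

if __name__ == "__main__":
    print(solve(labels))  # prints: ['B']
```

Enumeration like this gives a ground truth against which a model’s answer at each reasoning level can be compared.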

What were the results of the ‘Race Fuel’ test using o3-mini?

In low reasoning mode, o3-mini gave the correct fuel calculation; in high reasoning mode, it did not. A higher reasoning level therefore does not guarantee a better answer.
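The article does not give the figures used in the ‘Race Fuel’ test, so the arithmetic below is a hypothetical example only. It assumes a timed race with constant lap times, in which the lap in progress when the clock runs out must be completed; `race_fuel_litres` and every number in it are illustrative.

```python
import math


def race_fuel_litres(race_minutes: float, lap_time_s: float,
                     litres_per_lap: float, reserve_laps: int = 0) -> float:
    """Fuel needed for a hypothetical timed race.

    Assumes constant lap times and that a partial lap at the end of the
    clock still has to be completed, so the lap count is rounded up.
    """
    laps = math.ceil(race_minutes * 60 / lap_time_s)
    return (laps + reserve_laps) * litres_per_lap


if __name__ == "__main__":
    # 30 minutes at 1:45 per lap is about 17.1 laps, rounded up to 18.
    print(race_fuel_litres(30, 105, 2.5))                  # prints: 45.0
    print(race_fuel_litres(30, 105, 2.5, reserve_laps=1))  # prints: 47.5
```

Rounding the partial lap up is the kind of detail such questions hinge on; racing series differ on whether an extra lap is required, so real fuel strategy should follow the applicable rules.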

What concerns does the performance of o3-mini raise about AI reliability?

The mixed results raise concerns about reliability: even an advanced model like o3-mini can get a basic question wrong, so its answers call for caution.

What implications does the performance of AI models like o3-mini have for future development?

The results suggest that AI models like o3-mini still fall short of consistent reliability and accuracy, which raises questions about their readiness for more complex applications and the risks those applications carry.

| Key Points | Details |
|---|---|
| Launch of o3-mini | OpenAI released o3-mini, a new, cost-effective reasoning model that matches the performance of its predecessor, o1. |
| Performance | o3-mini has been praised for its efficiency and speed, and it ranks highly in global benchmarks. |
| Reasoning models | These models reason through a problem before answering instead of responding immediately, which can improve accuracy but may lengthen wait times. |
| Testing methodology | Each test was run at the low and high reasoning levels to assess the quality, value, and utility of the answers. |
| Test 1: Truth or Lie? | o3-mini used logical reasoning to identify the box containing the keys at both reasoning levels. |
| Test 2: Race Fuel | In a fuel-calculation scenario, o3-mini answered correctly at the low reasoning level but incorrectly at the high level, highlighting reliability issues. |
| Conclusion | Although o3-mini shows promise, its potential for inaccuracies means caution is needed when relying on it for critical decisions. |
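The low-versus-high methodology can be repeated for any question with a known answer. In this sketch, `ask` is a hypothetical callable standing in for a real model call (for example, one that sends a request with the chosen reasoning level), and `fake_ask` returns placeholder answers rather than results from the tests described here.

```python
from typing import Callable, Dict, Iterable

# Hypothetical signature: (prompt, reasoning level) -> the model's final answer.
Ask = Callable[[str, str], str]


def compare_levels(ask: Ask, prompt: str, expected: str,
                   efforts: Iterable[str] = ("low", "high")) -> Dict[str, bool]:
    """Ask the same question at each reasoning level and record whether the
    answer matches the known-correct one (ignoring case and outer whitespace)."""
    results: Dict[str, bool] = {}
    for effort in efforts:
        answer = ask(prompt, effort)
        results[effort] = answer.strip().lower() == expected.strip().lower()
    return results


def fake_ask(prompt: str, effort: str) -> str:
    # Placeholder stand-in for a real API call; these answers are made up.
    return "B" if effort == "low" else "C"


if __name__ == "__main__":
    print(compare_levels(fake_ask, "Which box holds the keys?", "B"))
    # prints: {'low': True, 'high': False}
```

Exact string matching only suits short final answers such as a box letter or a number; longer responses need a more forgiving comparison.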

Summary

The o3-mini reasoning model has shown impressive capabilities, but it also highlights significant challenges in the reliability of AI responses. It performs well on certain logical tasks, yet it gave inconsistent results on calculations such as the race-fuel problem. This underscores the ongoing debate about whether AI is ready for crucial decision-making and reminds us of the need for skepticism and thorough evaluation in AI applications.